5 Proven Techniques to Fix Heteroskedasticity and Autocorrelation in Regression

Excerpt: Discover 5 proven techniques to handle heteroskedasticity and autocorrelation in regression. Ensure valid inference and trustworthy standard errors.

Introduction

In econometric modeling, heteroskedasticity and autocorrelation can undermine the reliability of regression inference. When the error terms exhibit non-constant variance (heteroskedasticity) or are serially correlated (autocorrelation), OLS coefficient estimates remain unbiased as long as the regressors are exogenous, but they are no longer efficient. The conventional standard errors are also biased, which makes hypothesis tests and confidence intervals unreliable. This article introduces key techniques to detect and correct these issues so that your inference stays valid and robust.

Why Heteroskedasticity and Autocorrelation in Regression Matter

Ordinary Least Squares (OLS) regression relies on the Gauss-Markov assumptions for its efficiency and for the validity of its conventional standard errors. These assumptions include homoskedasticity and the absence of autocorrelation. Violations lead to:

- Misestimated standard errors

- Inflated Type I or Type II error rates

- Inaccurate hypothesis testing and misleading conclusions

Both problems are particularly common in time series and panel data models. Autocorrelation arises from dynamics that persist over time. Heteroskedasticity arises from heterogeneity across entities, and it is also frequent in cross-sectional data.

How to Detect Heteroskedasticity and Autocorrelation

Diagnosing these problems is crucial before applying remedies. Use the following statistical tests:

- Breusch-Pagan Test: Detects heteroskedasticity by regressing squared residuals on the explanatory variables.

- White’s Test: A more general check that also uses squares and cross-products of the regressors, so it can detect nonlinear forms of heteroskedasticity.

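- Durbin-Watson Test: Identifies first-order autocorrelation in residuals. Values near 2 indicate none. The test is not valid when the model includes a lagged dependent variable.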

- Breusch-Godfrey Test: Tests for higher-order serial correlation and remains valid with lagged dependent variables.

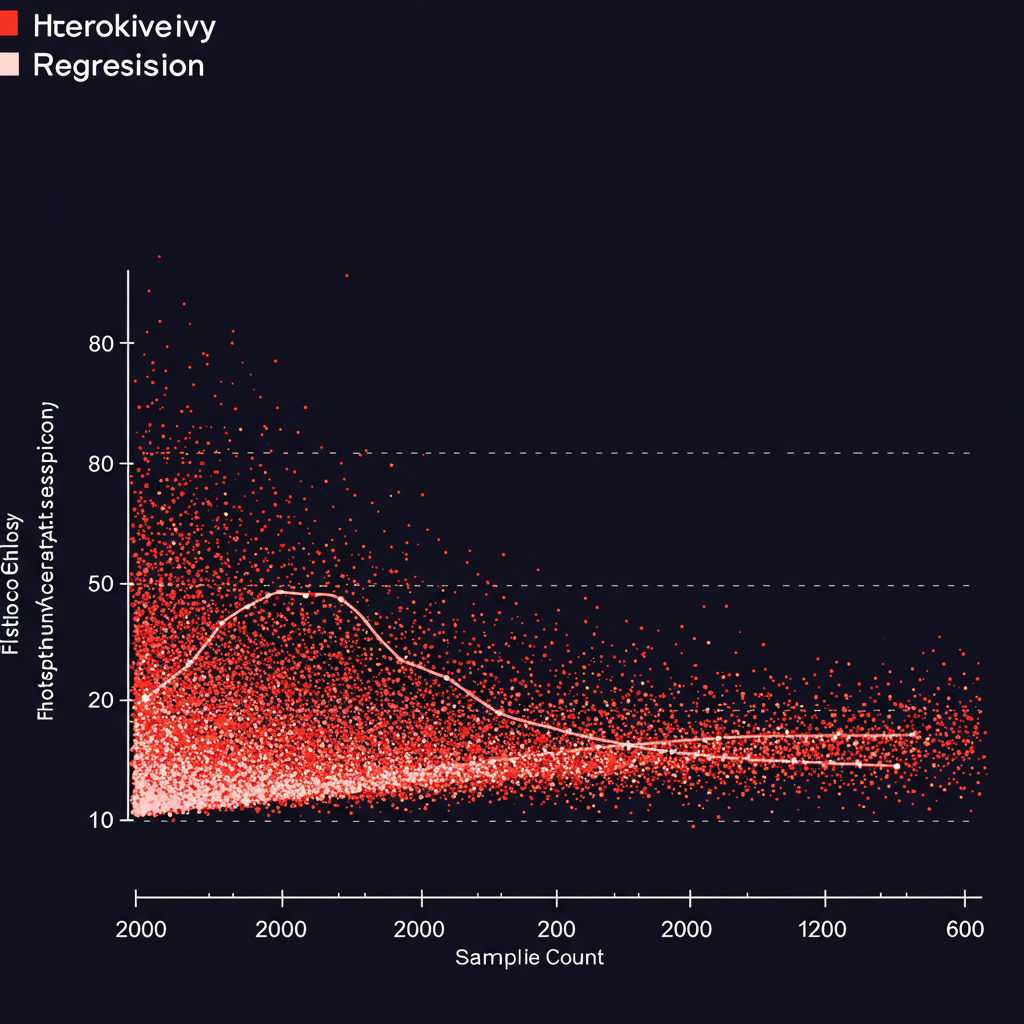

Visual diagnostics complement these formal tests. In a residual-vs-fitted plot, a funnel shape suggests heteroskedasticity. In an autocorrelation function (ACF) plot of the residuals, spikes outside the confidence band suggest autocorrelation.

5 Proven Techniques to Fix Heteroskedasticity and Autocorrelation

1. Use Robust Standard Errors

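Robust standard errors correct inference for non-constant error variance without changing the coefficient estimates. The classic example is White’s heteroskedasticity-consistent standard errors, often labeled HC0 through HC3. They are standard in cross-sectional models, where heteroskedasticity is common, but they do not account for autocorrelation.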

2. Apply Generalized Least Squares (GLS)

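GLS and Feasible GLS (FGLS) explicitly model the structure of the heteroskedasticity or autocorrelation, then reweight or transform the data accordingly. Examples include an error variance proportional to a regressor, or AR(1) errors. When that structure is correctly specified, the resulting estimators are more efficient than OLS. When it is misspecified, both the efficiency gain and the validity of the reported standard errors can be lost.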

3. Use the Newey-West Estimator

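The Newey-West estimator produces heteroskedasticity- and autocorrelation-consistent (HAC) standard errors, so it adjusts for both problems at once. It is widely used with financial and macroeconomic time series, especially when the exact error structure is unknown but likely problematic. Like robust standard errors, it leaves the OLS coefficients unchanged. The analyst must choose a lag truncation parameter.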

4. Transform the Regression Model

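Log-transforming variables can stabilize variance that grows with the level of the data. Differencing time-series data can remove persistent (for example, unit-root) serial correlation. Including interaction terms addresses misspecification that may otherwise show up as heteroskedastic or autocorrelated residuals. Note that each transformation changes how the coefficients are interpreted.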

5. Add Lagged Dependent Variables

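Including lagged values of the dependent variable often absorbs autocorrelation in time series regressions. Be mindful of endogeneity: if the errors remain serially correlated, the lagged dependent variable is correlated with the error term and OLS becomes inconsistent. In panel data models with fixed effects, consider instruments or difference/system GMM estimators.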

Extra Tips for Handling Heteroskedasticity and Autocorrelation

Improve Model Specification

Incorrect model specification is a major source of apparent heteroskedasticity and autocorrelation. Always examine whether key explanatory variables are missing or whether nonlinearities are ignored. For instance, an omitted variable that changes slowly over time ends up in the residuals and makes them serially correlated. Introducing relevant interaction terms or spline functions can also improve the specification.

Bootstrap Standard Errors

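When heteroskedasticity and autocorrelation are severe or the sample size is small, consider bootstrapping to generate more reliable confidence intervals. This non-parametric method avoids assuming a particular error distribution, but the resampling scheme must match the data’s dependence. The standard i.i.d. bootstrap assumes independent observations. Use a pairs or wild bootstrap for heteroskedasticity and a block bootstrap for autocorrelated data.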
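A moving-block bootstrap is one way to respect serial dependence. In this minimal NumPy sketch, the function name `block_bootstrap_se`, the block length of 20, and the 500 replications are illustrative assumptions, not tuned choices. The function resamples overlapping blocks of consecutive observations and refits OLS on each resample. With a block length of 1, it reduces to the ordinary i.i.d. pairs bootstrap, which understates the slope’s uncertainty when both the regressor and the errors are persistent.

```python
import numpy as np


def block_bootstrap_se(y, X, block_len, n_boot=500, seed=0):
    """Hypothetical helper: moving-block bootstrap SEs for OLS coefficients.

    Resamples overlapping blocks of consecutive (y, X) rows so that
    within-block serial dependence is preserved; block_len=1 reduces to
    the ordinary i.i.d. pairs bootstrap.
    """
    rng = np.random.default_rng(seed)
    n = len(y)
    n_blocks = int(np.ceil(n / block_len))
    draws = np.empty((n_boot, X.shape[1]))
    for b in range(n_boot):
        # Random block start positions, then consecutive indices per block.
        starts = rng.integers(0, n - block_len + 1, size=n_blocks)
        idx = (starts[:, None] + np.arange(block_len)).ravel()[:n]
        beta = np.linalg.lstsq(X[idx], y[idx], rcond=None)[0]
        draws[b] = beta
    return draws.std(axis=0, ddof=1)


# Illustrative data: AR(1) regressor and AR(1) errors (phi = 0.8).
rng = np.random.default_rng(8)
n = 400
x = np.zeros(n)
e = np.zeros(n)
for t in range(1, n):
    x[t] = 0.8 * x[t - 1] + rng.normal()
    e[t] = 0.8 * e[t - 1] + rng.normal()
y = 1.0 + 0.5 * x + e
X = np.column_stack([np.ones(n), x])

se_iid = block_bootstrap_se(y, X, block_len=1)
se_block = block_bootstrap_se(y, X, block_len=20)
print("i.i.d. pairs bootstrap SE:", se_iid)
print("moving-block bootstrap SE:", se_block)
```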

Compare Multiple Models

It’s useful to estimate the same regression under several correction techniques, such as robust SEs, GLS, and Newey-West, and compare the standard errors and significance levels. If your conclusions hold across methods, they are not an artifact of one particular correction, which strengthens the model’s credibility.

Tools for Addressing Heteroskedasticity and Autocorrelation

Implement these techniques using:

- R: Packages like `lmtest`, `car`, and `sandwich` support diagnostics and robust corrections.

- Python: Use `statsmodels` for tests and HAC standard errors.

- Stata: Commands like `regress, robust` and `newey` offer built-in correction options.

Explore the Statsmodels regression diagnostics or Stata regression manual for detailed examples.

Conclusion

Fixing heteroskedasticity and autocorrelation in regression is not just a technical adjustment; it is essential for producing valid and credible econometric analysis. Robust standard errors, GLS, Newey-West estimators, transformations, and lagged variables each address different symptoms. Choose among them based on your data characteristics and model objectives.

If left unchecked, heteroskedasticity and autocorrelation can severely distort standard errors and significance tests, and with them any real-world decisions based on the regression output. Take a comprehensive approach using multiple tools, and always validate your model after estimation.
